High Availability Kubernetes Using RKE in ServerStadium Part 1

Kubernetes is an open-source platform that manages containerized workloads and applications across a cluster of servers.

Kubernetes was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF).

In short, it makes it easier to configure, deploy, manage, and monitor containerized applications at large scale across different deployment environments.

Before we talk about containers, let’s talk about Docker, a popular container platform that is often used with Kubernetes.

Docker is an open-source tool that packages a program together with only the libraries, files, and settings it needs to run, producing a self-contained unit called an image. We can use that image to run an application such as Nginx or WordPress.

A running instance of an image is called a container.

Kubernetes runs containers inside pods, and a pod is the smallest deployable unit in Kubernetes.

Installing Kubernetes can be tricky because there are multiple ways to install it. Kubernetes is also not limited to Docker for running containers: it can use other container runtimes such as containerd or CRI-O.

With RKE1 (Rancher Kubernetes Engine), Docker is the container runtime, which keeps the Kubernetes installation simple.

RKE1 is a CNCF-certified Kubernetes distribution whose components run entirely within Docker containers. It lets us install Kubernetes on our infrastructure from a single YAML file.

After Kubernetes has been installed, we will install Rancher to manage it.

Rancher provides a web UI that we can use to add, manage, monitor, and remove nodes, create deployments, create persistent volumes, and much more.

In Part 1, we will deploy Kubernetes on ServerStadium Cloud as follows:

- Launch at least 3 VMs on ServerStadium Cloud for a high availability workload.

- Enable passwordless SSH login for each Kubernetes node.

- Install Docker on each node.

- Download the RKE binary and configure the Kubernetes cluster.

Launch at Least 3 VMs on ServerStadium Cloud for a High Availability Workload

For High Availability Kubernetes, we need three VMs as Kubernetes nodes in ServerStadium Cloud. Below is the specification for every node:

Operating System: Ubuntu 20.04

Memory: 4GB

CPU: 2 cores

Disk: 20 GB

Public IP address: only for node1, because we will run RKE from node1.

For node2 and node3, we only need the Private IP Address.

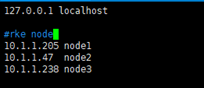
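Why three nodes? In this setup every node also runs etcd, and etcd keeps working only while a majority (quorum) of its members is up. For n members the quorum is floor(n/2) + 1, so the number of members that may fail is n minus the quorum. The sketch below just does that arithmetic; the function names are illustrative and not part of RKE.

```shell
#!/usr/bin/env bash
# Illustrative helpers (hypothetical names) for etcd quorum arithmetic.
# Shell integer division truncates, so $(( n / 2 )) is floor(n/2) for n >= 0.

# Members that must be up for etcd to keep accepting writes.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that can fail while a quorum still remains.
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Print a small table for cluster sizes 1 to 5.
for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

The table shows that three members is the smallest cluster that survives the loss of one node, while a fourth member still tolerates only one failure, which is why odd member counts are the usual choice.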

Below is the IP Address of every node:

node1:

Public IP Address: 198.167.141.249

Private IP Address: 10.1.1.205

node2:

Private IP Address: 10.1.1.47

node3:

Private IP Address: 10.1.1.238

Enable SSH Passwordless Login for Each Kubernetes Node

Before deploying RKE, we must enable passwordless SSH login, because RKE connects to every node over SSH to install Kubernetes. To make this easier, we also give each node an internal hostname in /etc/hosts: names such as node1, node2, and node3 are easier to remember than IP addresses.

# vim /etc/hosts

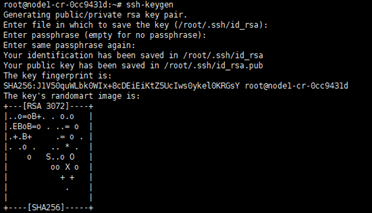
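If you prefer to script this step, the sketch below appends one "IP hostname" line per node to a hosts file and skips names that are already mapped. It assumes node1, node2, and node3 should resolve to the private addresses listed above; add_host_entry is a hypothetical helper, not a system command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP<TAB>hostname" to a hosts file unless a
# line already maps that hostname (matches preceded by "#" are ignored).
# Usage: add_host_entry FILE IP HOSTNAME
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Match the hostname as a whole word, with no "#" earlier on the line.
  if grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    echo "skip: ${name} already present in ${file}"
  else
    printf '%s\t%s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, run add_host_entry /etc/hosts 10.1.1.205 node1 as root on node1, then repeat it for node2 (10.1.1.47) and node3 (10.1.1.238). Running it twice does not create duplicate lines.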

Next, generate an SSH key pair on node1 using the following command.

# ssh-keygen

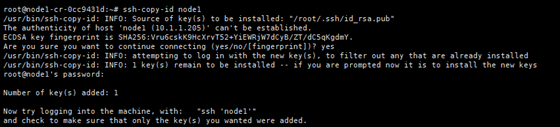
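The interactive ssh-keygen run above can also be done non-interactively. RKE reads the private key from ~/.ssh/id_rsa by default (the ssh_key_path option in cluster.yml), so this sketch creates an RSA key at that path with an empty passphrase and leaves an existing key untouched. ensure_ssh_key is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair without a passphrase,
# unless a private key already exists at the given path.
# Usage: ensure_ssh_key [KEY_PATH]   (default: ~/.ssh/id_rsa)
ensure_ssh_key() {
  local key_path="${1:-$HOME/.ssh/id_rsa}"
  local key_dir
  key_dir="$(dirname "$key_path")"
  if [ -f "$key_path" ]; then
    echo "Key already exists: ${key_path}"
    return 0
  fi
  # ssh expects the key directory to be private to its owner.
  mkdir -p "$key_dir" && chmod 700 "$key_dir" || return 1
  # -N "" sets an empty passphrase; -q suppresses the randomart output.
  ssh-keygen -q -t rsa -b 4096 -N "" -f "$key_path"
}
```

Because the key has no passphrase, anyone who can read it can log in to all three nodes, so keep node1's ~/.ssh directory private.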

After the key pair has been generated, upload the public key to each node with the ssh-copy-id command.

Please run the following commands from node1.

# ssh-copy-id node3

# ssh-copy-id node2

# ssh-copy-id node1
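The three ssh-copy-id calls can also be looped, and it is worth confirming afterwards that key-based login really works, since RKE cannot answer a password prompt. In this sketch, ssh -o BatchMode=yes makes ssh fail instead of prompting. copy_key_to_nodes and verify_passwordless_ssh are hypothetical helper names, and root is the SSH user used in cluster.yml later on.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for distributing and checking SSH keys from node1.

# Copy the default public key to USER@NODE for each node
# (ssh-copy-id asks for the password once per node).
# Usage: copy_key_to_nodes USER NODE...
copy_key_to_nodes() {
  local user="$1" node
  shift
  for node in "$@"; do
    ssh-copy-id "${user}@${node}" || return 1
  done
}

# Return non-zero if any node still asks for a password or is unreachable.
# Usage: verify_passwordless_ssh USER NODE...
verify_passwordless_ssh() {
  local user="$1" node failed=0
  shift
  for node in "$@"; do
    # BatchMode=yes disables password prompts, so ssh fails instead of waiting.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "${user}@${node}" true 2>/dev/null; then
      echo "OK: ${user}@${node}"
    else
      echo "FAILED: ${user}@${node}" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

Usage from node1: copy_key_to_nodes root node1 node2 node3, then verify_passwordless_ssh root node1 node2 node3. Every node should print OK before moving on.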

Install Docker on Each Node

RKE runs all Kubernetes components inside Docker containers, so Docker must be installed on every node. Run the following commands on each node.

First, update the package index and install the packages that allow apt to use a repository over HTTPS:

sudo apt-get update && sudo apt-get install apt-transport-https ca-certificates curl software-properties-common -y

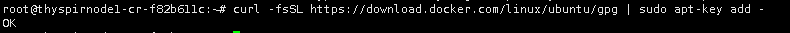

Add Docker’s official GPG key so that apt can verify the authenticity of the packages:

# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

Now, add the Docker repository to the server, then install the stable version of Docker:

# sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

# sudo apt-get update && sudo apt-get install docker-ce containerd.io -y
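Since passwordless SSH is already set up, the same Docker steps could be run on all three nodes from node1 instead of logging in to each one. This sketch assumes root’s login shell on every node is bash (the Ubuntu default) and uses bash’s declare -f to send the function definition over SSH; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the Docker installation steps above, run as root on one Ubuntu node.
install_docker_ubuntu() {
  export DEBIAN_FRONTEND=noninteractive  # avoid interactive apt prompts over SSH
  apt-get update &&
    apt-get install -y apt-transport-https ca-certificates curl software-properties-common &&
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add - &&
    add-apt-repository -y "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" &&
    apt-get update &&
    apt-get install -y docker-ce containerd.io &&
    systemctl enable --now docker
}

# Run install_docker_ubuntu on each node over SSH, stopping at the first failure.
# Usage: install_docker_on_nodes NODE...
install_docker_on_nodes() {
  local node
  for node in "$@"; do
    echo "=== ${node} ==="
    # declare -f prints the function's source so the remote bash can define and call it.
    ssh "root@${node}" "$(declare -f install_docker_ubuntu); install_docker_ubuntu" || return 1
    # Print the Docker server version as a quick check that the daemon is running.
    ssh "root@${node}" "docker version --format '{{.Server.Version}}'" || return 1
  done
}
```

Usage from node1: install_docker_on_nodes node1 node2 node3. The $(lsb_release -cs) substitution is part of the function text, so it is evaluated on each remote node rather than on node1.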

Download RKE Binary File and Configure the Kubernetes

Before we deploy Kubernetes, we need to download the RKE binary. Download the latest release from https://github.com/rancher/rke/releases/ (this guide uses v1.2.12):

# wget https://github.com/rancher/rke/releases/download/v1.2.12/rke_linux-amd64

Rename the file to rke:

# mv rke_linux-amd64 rke

Then, make rke executable:

# chmod +x rke
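To pin the RKE version in one place, the download can be wrapped in a small function. The sketch below rebuilds the same release URL used above for any version tag, downloads it as ./rke, and prints its version. rke_download_url and install_rke are hypothetical helper names; only the rke_linux-amd64 asset name comes from this guide, so any other architecture suffix is an assumption to check on the releases page.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the GitHub release URL for an RKE binary.
# Usage: rke_download_url VERSION [ARCH]   e.g. rke_download_url v1.2.12
rke_download_url() {
  local version="$1" arch="${2:-amd64}"
  printf 'https://github.com/rancher/rke/releases/download/%s/rke_linux-%s\n' "$version" "$arch"
}

# Download RKE to DEST (default ./rke), make it executable, and print its version.
# Usage: install_rke VERSION [DEST]
install_rke() {
  local version="$1" dest="${2:-./rke}"
  wget -q -O "$dest" "$(rke_download_url "$version")" &&
    chmod +x "$dest" &&
    "$dest" --version
}
```

For example, install_rke v1.2.12 reproduces the wget, mv, and chmod steps above in one command.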

Next, create a simple cluster.yml that defines the three ServerStadium Cloud nodes:

nodes:
  - address: node1
    user: root
    internal_address: 10.1.1.205
    role: [controlplane, etcd, worker]
  - address: node2
    user: root
    internal_address: 10.1.1.47
    role: [controlplane, etcd, worker]
  - address: node3
    user: root
    internal_address: 10.1.1.238
    role: [controlplane, etcd, worker]

services:
  etcd:
    snapshot: true
    creation: 6h
    retention: 24h

# Required for external TLS termination with
# ingress-nginx v0.22+
ingress:
  provider: nginx
  options:
    use-forwarded-headers: "true"

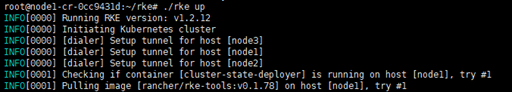

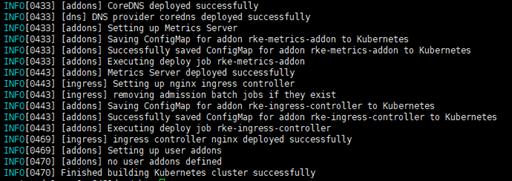
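Before running rke up, it can save time to confirm that every node listed in cluster.yml accepts SSH as the configured user and can run Docker, since RKE needs both. This sketch pulls the node addresses out of cluster.yml with plain text matching; it is not a YAML parser and assumes one "- address: host" entry per line, as in the file above. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "address" of every node in an RKE cluster.yml.
# Plain text matching, not YAML parsing: expects lines like "  - address: node1".
cluster_node_addresses() {
  awk '$1 == "-" && $2 == "address:" { print $3 }' "$1"
}

# Check that each node accepts key-based SSH and can talk to the Docker daemon.
# Usage: preflight_rke_nodes CLUSTER_YML [USER]   (USER defaults to root)
preflight_rke_nodes() {
  local file="$1" user="${2:-root}" node failed=0
  for node in $(cluster_node_addresses "$file"); do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "${user}@${node}" docker info >/dev/null 2>&1; then
      echo "OK: ${node}"
    else
      echo "NOT READY: ${node} (SSH login or Docker failed)" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

Usage on node1: preflight_rke_nodes cluster.yml. A NOT READY line usually points back to the SSH key or Docker installation steps for that node.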

Save the file, then deploy Kubernetes with rke up, running it from the directory that contains cluster.yml (the file rke up reads by default). The installation takes around 10-15 minutes.

# ./rke up
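The config file can also be passed explicitly with ./rke up --config cluster.yml. By default RKE names its outputs after the config file, so cluster.yml yields kube_config_cluster.yml and cluster.rkestate. A quick sketch to confirm that a run produced them; check_rke_outputs is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm the files RKE leaves next to cluster.yml.
# Usage: check_rke_outputs [DIR]   (default: current directory)
check_rke_outputs() {
  local dir="${1:-.}" f missing=0
  for f in cluster.yml kube_config_cluster.yml cluster.rkestate; do
    if [ -f "${dir}/${f}" ]; then
      echo "found:   ${dir}/${f}"
    else
      echo "missing: ${dir}/${f}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run check_rke_outputs in the directory where rke up was executed; a non-zero exit status means at least one file is missing.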

When the installation is complete, keep the following three files in a secure location:

cluster.yml: The RKE cluster configuration file that we created previously.

kube_config_cluster.yml: The kubeconfig file for the cluster.

cluster.rkestate: The Kubernetes cluster state file.

To check the status of the cluster nodes, we can use kubectl.

kubectl is the command-line tool for sending commands to a Kubernetes cluster.

It authenticates with the Kubernetes API server, which runs on the control plane nodes, and makes API calls to perform various management actions.

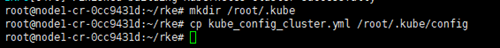

kubectl reads its configuration from ~/.kube/config by default, so copy kube_config_cluster.yml there:

# mkdir -p /root/.kube

# cp kube_config_cluster.yml /root/.kube/config

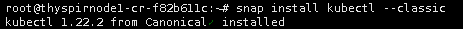
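The kubeconfig holds administrator credentials for the cluster, so it is sensible to make the copy readable only by its owner. This sketch performs the same copy with mkdir -p (so it can be re-run) plus chmod 600; install_kubeconfig is a hypothetical helper name. As an alternative to copying, kubectl also honours the KUBECONFIG environment variable, for example export KUBECONFIG=$PWD/kube_config_cluster.yml.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy an RKE kubeconfig to kubectl's default location
# and make it readable only by its owner.
# Usage: install_kubeconfig [SRC] [KUBE_DIR]
install_kubeconfig() {
  local src="${1:-kube_config_cluster.yml}" kube_dir="${2:-$HOME/.kube}"
  mkdir -p "$kube_dir" &&
    cp "$src" "${kube_dir}/config" &&
    chmod 600 "${kube_dir}/config"
}
```

Running install_kubeconfig as root in the directory that contains kube_config_cluster.yml produces /root/.kube/config, matching the commands above.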

Now, install kubectl on node1:

# snap install kubectl --classic

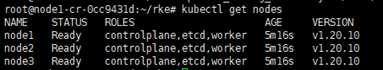

Run kubectl to get the status of the nodes:

# kubectl get nodes

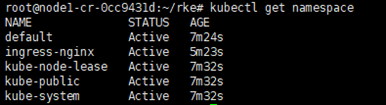

List the namespaces in the cluster:

# kubectl get namespace
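Right after rke up, nodes may report NotReady for a short time while networking starts. The sketch below polls kubectl get nodes until the expected number of nodes are Ready. The helper names, the 10-second interval, and the default of 3 nodes are assumptions for this three-node cluster. kubectl can also do the waiting itself with kubectl wait --for=condition=Ready nodes --all --timeout=300s.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count lines whose STATUS column (2nd field) is exactly
# "Ready" in "kubectl get nodes --no-headers" output read from stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Poll until at least EXPECTED nodes are Ready; give up after TRIES attempts.
# Usage: wait_for_ready_nodes [EXPECTED] [TRIES]
wait_for_ready_nodes() {
  local expected="${1:-3}" tries="${2:-30}" ready i=1
  while [ "$i" -le "$tries" ]; do
    ready="$(kubectl get nodes --no-headers 2>/dev/null | count_ready_nodes)"
    echo "Ready nodes: ${ready}/${expected} (attempt ${i}/${tries})"
    if [ "$ready" -ge "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    # Sleep only if another attempt will follow.
    [ "$i" -le "$tries" ] && sleep 10
  done
  return 1
}
```

For this cluster, wait_for_ready_nodes 3 returns as soon as node1, node2, and node3 all report Ready.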

The Kubernetes cluster is now deployed. In the next part, we will install Rancher to manage it.

Let’s continue to the next part.